Getting started with the C++ SDK

1. Introduction

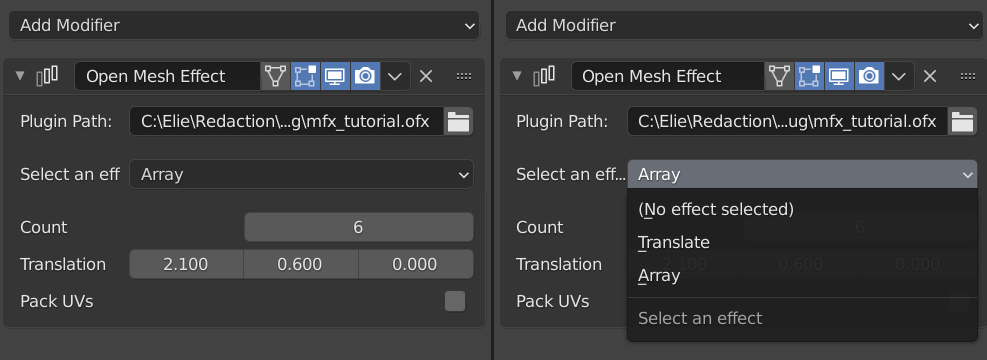

Although one can use the raw C API, the C++ SDK is the easiest way to create an OpenMfx plugin. In this tutorial, we'll see how to write a simple plugin bundling a few effects, which you may then load as a modifier in the OpenMfx branch of Blender.

NB This tutorial follows the principle of literate programming, which means that it is written primarily for human reading, but is also a complete and valid code base if one follows the {Curly bracket titles} to browse through code blocks. The code generated from this text is available in the git repository.

2. Setup

Setting the project up is pretty straightforward. We create a directory, download the SDK, and use a simple CMake file to build it.

Create the root directory for your project. It usually starts with "Mfx":

Get the OpenMfx repository, either as a submodule or by downloading it as a zip. It contains the C++ SDK in the CppPluginSupport subdirectory.

git init

git submodule add https://github.com/eliemichel/OpenMfx

Used in section 1

In this example, we'll use CMake as a build system, so create this minimal CMakeLists.txt file configuring the project:

cmake_minimum_required(VERSION 3.0...3.18.4)

# Name of the project

project(MfxTutorial)

# Add OpenMfx to define CppPluginSupport, the C++ helper API

add_subdirectory(OpenMfx)

# Define the plugin target, called for instance mfx_tutorial

# You may list additional source files after "plugin.cpp"

add_library(mfx_tutorial SHARED plugin.cpp)

# Set up the target to depend on CppPluginSupport and output a file

# called .ofx (rather than the standard .dll or .so)

target_link_libraries(mfx_tutorial PRIVATE CppPluginSupport)

set_target_properties(mfx_tutorial PROPERTIES SUFFIX ".ofx")

To build the plugin, follow the usual cmake workflow:

This CMakeLists.txt states that the source code for the plugin lives in plugin.cpp, so create that file.

At the very least, this file must do two things:

(i) Define effects. Effects are defined by subclassing the MfxEffect class provided by the SDK:

#include <PluginSupport/MfxEffect>

class TranslateEffect : public MfxEffect {

{Behavior of TranslateEffect, 8}

};

Used in section 7

(ii) Register effects into the final binary. This is actually a macro handling the boilerplate required to expose the correct symbols in the final library, but you don't have to bother about the details.

3. Core components of an effect

An effect class like TranslateEffect will at least override the two main methods Describe and Cook. The former defines the effect's inputs, outputs and parameters, while the latter implements the core process that computes the outputs.

protected:

OfxStatus Describe(OfxMeshEffectHandle descriptor) override {

{Describe, 10}

}

OfxStatus Cook(OfxMeshEffectHandle instance) override {

{Cook, 13}

}

Added to in section 9

Used in section 6

We may also override the GetName() method to set the name displayed to the end user when selecting the effect.

public:

const char* GetName() override {

return "Translate";

}

Added to in section 9

Used in section 6

3.1. Describing the effect

The Describe method is called only once upon loading, and does not depend on the actual content of the input.

We first define a single input and output, using the standardized kOfxMeshMainInput and kOfxMeshMainOutput names, and we could add extra ones with arbitrary names:

AddInput(kOfxMeshMainInput);

AddInput(kOfxMeshMainOutput);

Added to in sections 11 and 12

Used in section 8

NB What the API calls "input" is actually any kind of slot, including both inputs and outputs.

Then we define a few parameters that will be exposed for the user to tune the behavior of our effect. The type of parameters is inferred from their default value.

// Add a vector3 parameter

AddParam("translation", double3{0.0, 0.0, 0.0})

.Label("Translation") // Name used for display

.Range(double3{-10.0, -10.0, -10.0}, double3{10.0, 10.0, 10.0});

// Add a bool parameter

AddParam("tickme", false)

.Label("Tick me!");

Added to in sections 11 and 12

Used in section 8
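To see how a type can follow from a default value, here is a minimal sketch based on ordinary template argument deduction. It is independent of the SDK: ParamSketch, MakeParam and double3_sketch are hypothetical names introduced for illustration, and the actual AddParam may well be implemented differently.

```cpp
#include <array>
#include <cassert>
#include <string>
#include <type_traits>
#include <utility>

// Hypothetical stand-in for a typed parameter; not the SDK's own class.
template <typename T>
struct ParamSketch {
    std::string name;
    T default_value;
    std::string label;

    // Fluent setter returning *this so that calls can be chained.
    ParamSketch& Label(std::string text) {
        label = std::move(text);
        return *this;
    }
};

// T is deduced from the default value, so the caller never spells it out.
template <typename T>
ParamSketch<T> MakeParam(std::string name, T default_value) {
    assert(!name.empty());
    return ParamSketch<T>{std::move(name), default_value, ""};
}

// Hypothetical three-component vector type used only in this sketch.
using double3_sketch = std::array<double, 3>;
```

With these definitions, MakeParam("tickme", false) yields a ParamSketch<bool> while MakeParam("count", 2) yields a ParamSketch<int>, without the caller ever naming the type.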

Finally, we return kOfxStatOK to tell the host that everything went OK. Other values are possible in case of error (see the definition of OfxStatus in ofxCore.h).

3.2. Cooking

Inside Cook, we have access to the parameter values and input data. We first retrieve these, then allocate the output mesh, and finally fill it in. At the very end, we must not forget to release the input and output meshes.

{Get Inputs, 14}

{Get Parameters, 16}

{Estimate output size, 18}

{Allocate output, 19}

{Fill in output, 21}

{Release data, 22}

return kOfxStatOK;

Used in section 8

3.2.a. Get Inputs

Getting the input is as simple as calling GetInput, but we then need to think about what information we need from this input.

MfxMesh input_mesh = GetInput(kOfxMeshMainInput).GetMesh();

Added to in section 15

Used in section 13

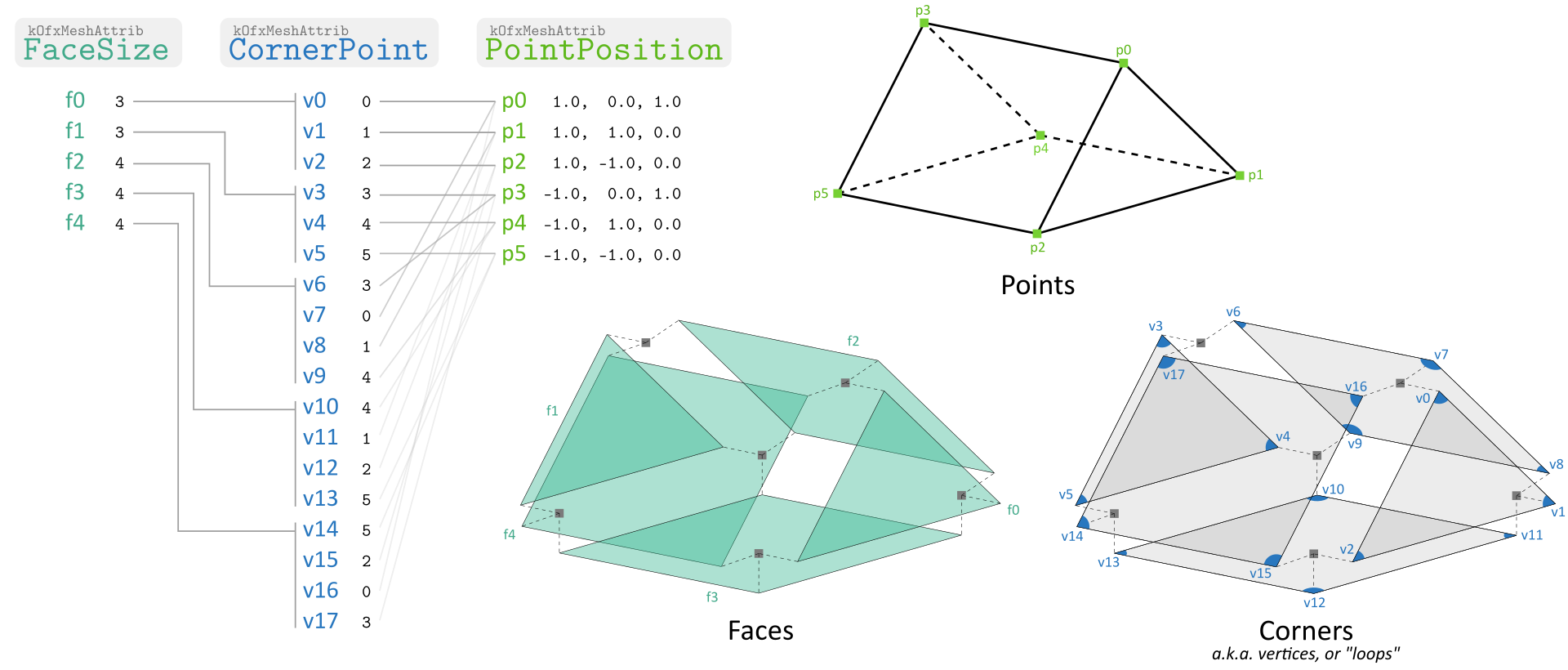

An input contains attributes, which are data attached to either points, (face) corners, faces or the mesh itself. Attributes can have any name, but some are standardized. For instance, the point attribute kOfxMeshAttribPointPosition contains the position of points.

MfxAttributeProps input_positions;

input_mesh.GetPointAttribute(kOfxMeshAttribPointPosition)

.FetchProperties(input_positions);

Added to in section 15

Used in section 13

NB The type MfxAttributeProps contains actual data and one should avoid copying it around, which is why it is not returned but rather provided by reference to FetchProperties(). Other types so far were blind handles occupying little memory.

In our final example, we'll duplicate and translate the input geometry, but for now let's focus only on the translation, for which the position attribute is all we need.

3.2.b. Get Parameters

Parameters are identified by the name used to create them. It is required to specify the type again though. From the handle returned by GetParam() one can get the current value of the parameter with GetValue():

MfxParam<double3> translation_param = GetParam<double3>("translation");

double3 translation = translation_param.GetValue();

Redefined in section 17

Used in section 13

Or, in a more compact way:

double3 translation = GetParam<double3>("translation").GetValue();

Redefined in section 17

Used in section 13

3.2.c. Allocate Output

Since in this first part we only translate the mesh, the size of the output is known already -- we copy properties from the input:

MfxMeshProps input_mesh_props;

input_mesh.FetchProperties(input_mesh_props);

int output_point_count = input_mesh_props.pointCount;

int output_corner_count = input_mesh_props.cornerCount;

int output_face_count = input_mesh_props.faceCount;

// Extra properties related to memory usage optimization

int output_no_loose_edge = input_mesh_props.noLooseEdge;

int output_constant_face_size = input_mesh_props.constantFaceSize;

Used in section 13

We can then allocate memory using the Allocate() method. Note that some objects like MfxAttributes have methods that can be called either only before or only after the memory allocation. Forwarding attributes, for instance, must occur prior to allocation (see below).

MfxMesh output_mesh = GetInput(kOfxMeshMainOutput).GetMesh();

{Forward Attributes, 20}

output_mesh.Allocate(

output_point_count,

output_corner_count,

output_face_count,

output_no_loose_edge,

output_constant_face_size);

Used in section 13

NB The last two arguments of Allocate are optional; we'll come back to them later on.

An output mesh needs at least the attributes kOfxMeshAttribPointPosition (on points), kOfxMeshAttribCornerPoint (on corners) and kOfxMeshAttribFaceSize (on faces, telling the number of corners per face).

Although we'll modify the position of the points, the two other attributes -- defining the connectivity of the mesh -- will remain identical. In such a case, we can forward them to the output, so that the same memory buffer is reused, without any copy:

output_mesh.GetCornerAttribute(kOfxMeshAttribCornerPoint)

.ForwardFrom(input_mesh.GetCornerAttribute(kOfxMeshAttribCornerPoint));

output_mesh.GetFaceAttribute(kOfxMeshAttribFaceSize)

.ForwardFrom(input_mesh.GetFaceAttribute(kOfxMeshAttribFaceSize));

Used in section 19

3.2.d. Computing Output

Once the output mesh has been allocated, the data field of the MfxAttributeProps of the output point positions points to a valid buffer that we can freely fill in.

MfxAttributeProps output_positions;

output_mesh.GetPointAttribute(kOfxMeshAttribPointPosition)

.FetchProperties(output_positions);

// (NB: This can totally benefit from parallelization using e.g. OpenMP)

for (int i = 0 ; i < output_point_count ; ++i) {

float *in_p = reinterpret_cast<float*>(input_positions.data + i * input_positions.stride);

float *out_p = reinterpret_cast<float*>(output_positions.data + i * output_positions.stride);

out_p[0] = in_p[0] + translation[0];

out_p[1] = in_p[1] + translation[1];

out_p[2] = in_p[2] + translation[2];

}

Used in section 13
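The pointer arithmetic in this loop matters because attribute values are not necessarily packed back to back: consecutive elements are stride bytes apart. The following sketch isolates that access pattern. AttributeViewSketch is a hypothetical stand-in that only mimics the data and stride fields used above; FloatsAt and TranslatePoints are likewise illustrative helpers, not SDK functions.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for the two attribute fields used in the loop above.
struct AttributeViewSketch {
    char* data;  // address of the first element
    int stride;  // distance in bytes between two consecutive elements
};

// Address of the i-th element, viewed as an array of floats.
inline float* FloatsAt(const AttributeViewSketch& attr, int i) {
    assert(attr.data != nullptr && attr.stride > 0 && i >= 0);
    return reinterpret_cast<float*>(
        attr.data + static_cast<std::ptrdiff_t>(i) * attr.stride);
}

// Translate point_count positions from `in` to `out`, honoring both strides.
// `translation` must point to 3 components.
inline void TranslatePoints(const AttributeViewSketch& in,
                            const AttributeViewSketch& out,
                            int point_count,
                            const double* translation) {
    for (int i = 0; i < point_count; ++i) {
        const float* in_p = FloatsAt(in, i);
        float* out_p = FloatsAt(out, i);
        for (int c = 0; c < 3; ++c) {
            out_p[c] = static_cast<float>(in_p[c] + translation[c]);
        }
    }
}
```

Because only data and stride are used, the same loop works whether positions are tightly packed (a stride of 3 * sizeof(float)) or interleaved with other per-point data.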

And finally we release the meshes by calling Release() on each of them. This will internally convert meshes to the host's representation (which for some attributes is the same).

This concludes our first effect, which translates the object.

4. Array Modifier

We will now see a slightly more complicated example in which we will need to deal with the connectivity information that in the Translation example we could simply forward.

4.1. Setup and describe

The base skeleton remains similar:

class ArrayEffect : public MfxEffect {

public:

const char* GetName() override {

return "Array";

}

protected:

OfxStatus Describe(OfxMeshEffectHandle descriptor) override {

{Describe ArrayEffect Inputs, 24}

{Describe ArrayEffect Parameters, 25}

return kOfxStatOK;

}

OfxStatus Cook(OfxMeshEffectHandle instance) override {

{Cook ArrayEffect, 26}

}

};

Used in section 7

Nothing new in the description, we define the standard input/output and some parameters:

AddInput(kOfxMeshMainInput);

AddInput(kOfxMeshMainOutput);

Redefined in section 35

Used in section 23

// Number of copies

AddParam("count", 2)

.Label("Count")

.Range(0, 2147483647);

// Translation added at each copy

AddParam("translation", double3{0.0, 0.0, 0.0})

.Label("Translation")

.Range(double3{-10.0, -10.0, -10.0}, double3{10.0, 10.0, 10.0});

Added to in section 34

Used in section 23

4.2. Cooking output with varying connectivity

The major change compared with the previous effect lies in the part that fills in the output.

{Get Array inputs and parameters, 27}

{Allocate Array output, 28}

{Fill in Array output, 29}

output_mesh.Release();

input_mesh.Release();

return kOfxStatOK;

Used in section 23

Getting the input and parameters is pretty straightforward:

MfxMesh input_mesh = GetInput(kOfxMeshMainInput).GetMesh();

MfxMeshProps input_props;

input_mesh.FetchProperties(input_props);

int count = GetParam<int>("count").GetValue();

double3 translation = GetParam<double3>("translation").GetValue();

Added to in sections 36 and 37

Used in section 26

Allocating memory just requires multiplying each element count by the number of copies. We don't forward anything this time.

MfxMesh output_mesh = GetInput(kOfxMeshMainOutput).GetMesh();

{Add extra output attributes, 38}

output_mesh.Allocate(

input_props.pointCount * count,

input_props.cornerCount * count,

input_props.faceCount * count,

input_props.noLooseEdge,

input_props.constantFaceSize);

Used in section 26
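Since the count parameter may be as large as 2147483647, these products can exceed what an int holds. The sketch below shows one possible guard to run before calling Allocate(). ScaledCount is a hypothetical helper, not part of the SDK, and it assumes that int is at most 32 bits wide so that the product always fits in a 64-bit integer.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>

// Multiply an element count by the number of copies. Returns false and
// leaves `result` untouched when the product does not fit in an int.
inline bool ScaledCount(int element_count, int copies, int& result) {
    assert(element_count >= 0 && copies >= 0);
    // Compute in 64 bits so that the multiplication itself cannot overflow
    // (assumption: int is at most 32 bits wide).
    const std::int64_t wide =
        static_cast<std::int64_t>(element_count) * copies;
    if (wide > std::numeric_limits<int>::max()) {
        return false;
    }
    result = static_cast<int>(wide);
    return true;
}
```

An effect could call this for the point, corner and face counts and return an error status instead of cooking when any of the three calls fails.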

This time we need to fill in not only the output positions but also the connectivity information. The latter is provided first by kOfxMeshAttribFaceSize, which gives the number of corners of each face. The corners are organized sequentially: corner 1 of face 1, corner 2 of face 1, ..., corner 1 of face 2, etc. Each corner corresponds to a point given by kOfxMeshAttribCornerPoint. So we get these attributes from both the input and the output:

MfxAttributeProps input_positions, input_corner_points, input_face_size;

input_mesh.GetPointAttribute(kOfxMeshAttribPointPosition).FetchProperties(input_positions);

input_mesh.GetCornerAttribute(kOfxMeshAttribCornerPoint).FetchProperties(input_corner_points);

input_mesh.GetFaceAttribute(kOfxMeshAttribFaceSize).FetchProperties(input_face_size);

MfxAttributeProps output_positions, output_corner_points, output_face_size;

output_mesh.GetPointAttribute(kOfxMeshAttribPointPosition).FetchProperties(output_positions);

output_mesh.GetCornerAttribute(kOfxMeshAttribCornerPoint).FetchProperties(output_corner_points);

output_mesh.GetFaceAttribute(kOfxMeshAttribFaceSize).FetchProperties(output_face_size);

Added to in sections 30 and 39

Used in section 26

Important note: when all faces have the same number of corners (typically when they are all triangles or all quads), the host may set the constantFaceSize property to a non-negative value. In such a case, the kOfxMeshAttribFaceSize attribute must not be used (this saves memory).

for (int k = 0 ; k < count ; ++k) {

{Fill positions for the k-th copy, 31}

{Fill corner_points for the k-th copy, 32}

if (input_props.constantFaceSize < 0) {

{Fill face_size for the k-th copy, 33}

}

}

Added to in sections 30 and 39

Used in section 26
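To make the connectivity layout described above concrete, the following sketch walks the faces of a mesh stored that way, covering both the per-face size array and the constant face size case. It uses std::vector rather than the SDK's attribute buffers, and TopologySketch, FaceSize and FacePoints are hypothetical names introduced for illustration.

```cpp
#include <cassert>
#include <vector>

// Hypothetical, SDK-independent view of the connectivity attributes.
struct TopologySketch {
    std::vector<int> corner_points;  // point index of each corner, face after face
    std::vector<int> face_sizes;     // corners per face; unused when constant_face_size >= 0
    int constant_face_size = -1;     // negative means "faces have varying sizes"
    int face_count = 0;
};

// Number of corners of face f, whichever representation is in use.
inline int FaceSize(const TopologySketch& topo, int f) {
    assert(f >= 0 && f < topo.face_count);
    return topo.constant_face_size >= 0 ? topo.constant_face_size
                                        : topo.face_sizes[f];
}

// Point indices of face f, found by skipping the corners of all previous faces.
inline std::vector<int> FacePoints(const TopologySketch& topo, int f) {
    int first_corner = 0;
    for (int g = 0; g < f; ++g) {
        first_corner += FaceSize(topo, g);
    }
    const int size = FaceSize(topo, f);
    return std::vector<int>(topo.corner_points.begin() + first_corner,
                            topo.corner_points.begin() + first_corner + size);
}
```

For a quad followed by a triangle, corner_points = {0, 1, 2, 3, 2, 1, 4} and face_sizes = {4, 3}; FacePoints(topo, 1) then skips the four corners of the quad and returns {2, 1, 4}.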

Filling the output positions is not so different from the Translation effect, just be careful with the indices:

for (int i = 0 ; i < input_props.pointCount ; ++i) {

int j = i + k * input_props.pointCount;

float *in_p = reinterpret_cast<float*>(input_positions.data + i * input_positions.stride);

float *out_p = reinterpret_cast<float*>(output_positions.data + j * output_positions.stride);

out_p[0] = in_p[0] + translation[0] * k;

out_p[1] = in_p[1] + translation[1] * k;

out_p[2] = in_p[2] + translation[2] * k;

}

Used in section 30

The corners of the k-th copy reference the points of the k-th copy, so we add an offset of k * input_props.pointCount to each value:

for (int i = 0 ; i < input_props.cornerCount ; ++i) {

int j = i + k * input_props.cornerCount;

int *in_p = reinterpret_cast<int*>(input_corner_points.data + i * input_corner_points.stride);

int *out_p = reinterpret_cast<int*>(output_corner_points.data + j * output_corner_points.stride);

out_p[0] = in_p[0] + k * input_props.pointCount;

}

Used in section 30
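The effect of this offset is easier to check on plain containers. Here is a minimal sketch in which std::vector stands in for the SDK's attribute buffers; RepeatCornerPoints is a hypothetical helper, not an SDK function.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Repeat the corner-to-point mapping `count` times. The corners of copy k
// point into the k-th block of points, hence the k * point_count offset.
inline std::vector<int> RepeatCornerPoints(const std::vector<int>& corner_points,
                                           int point_count,
                                           int count) {
    assert(point_count >= 0 && count >= 0);
    std::vector<int> out;
    out.reserve(corner_points.size() * static_cast<std::size_t>(count));
    for (int k = 0; k < count; ++k) {
        for (int p : corner_points) {
            assert(p >= 0 && p < point_count);  // input indices must be valid
            out.push_back(p + k * point_count);
        }
    }
    return out;
}
```

Duplicating a single triangle {0, 1, 2} twice gives {0, 1, 2, 3, 4, 5}: the second copy refers to points 3 to 5, which are the translated duplicates.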

Face sizes are just a simple repetition of the original values. If these values are contiguous in memory (i.e. the stride is sizeof(int)), this can be sped up using memcpy.

for (int i = 0 ; i < input_props.faceCount ; ++i) {

int j = i + k * input_props.faceCount;

int *in_p = reinterpret_cast<int*>(input_face_size.data + i * input_face_size.stride);

int *out_p = reinterpret_cast<int*>(output_face_size.data + j * output_face_size.stride);

out_p[0] = in_p[0];

}

Used in section 30
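The memcpy shortcut mentioned above can be sketched as follows, assuming that strides are expressed in bytes as in the loops of this tutorial. CopyInts is a hypothetical helper written against raw buffers, not an SDK function; it falls back to an element-by-element copy whenever either side is not tightly packed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Copy `count` ints between two strided buffers (strides in bytes).
// Returns true when the contiguous fast path was taken.
inline bool CopyInts(const char* src, int src_stride,
                     char* dst, int dst_stride, int count) {
    assert(src != nullptr && dst != nullptr && count >= 0);
    const int packed = static_cast<int>(sizeof(int));
    if (src_stride == packed && dst_stride == packed) {
        // Both sides are tightly packed: one bulk copy is enough.
        std::memcpy(dst, src, static_cast<std::size_t>(count) * sizeof(int));
        return true;
    }
    // General case: copy element by element, honoring each stride.
    for (int i = 0; i < count; ++i) {
        std::memcpy(dst + static_cast<std::ptrdiff_t>(i) * dst_stride,
                    src + static_cast<std::ptrdiff_t>(i) * src_stride,
                    sizeof(int));
    }
    return false;
}
```

In the k-th iteration of the Array loop, dst would be output_face_size.data + k * input_props.faceCount * output_face_size.stride, with input_props.faceCount as the count.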

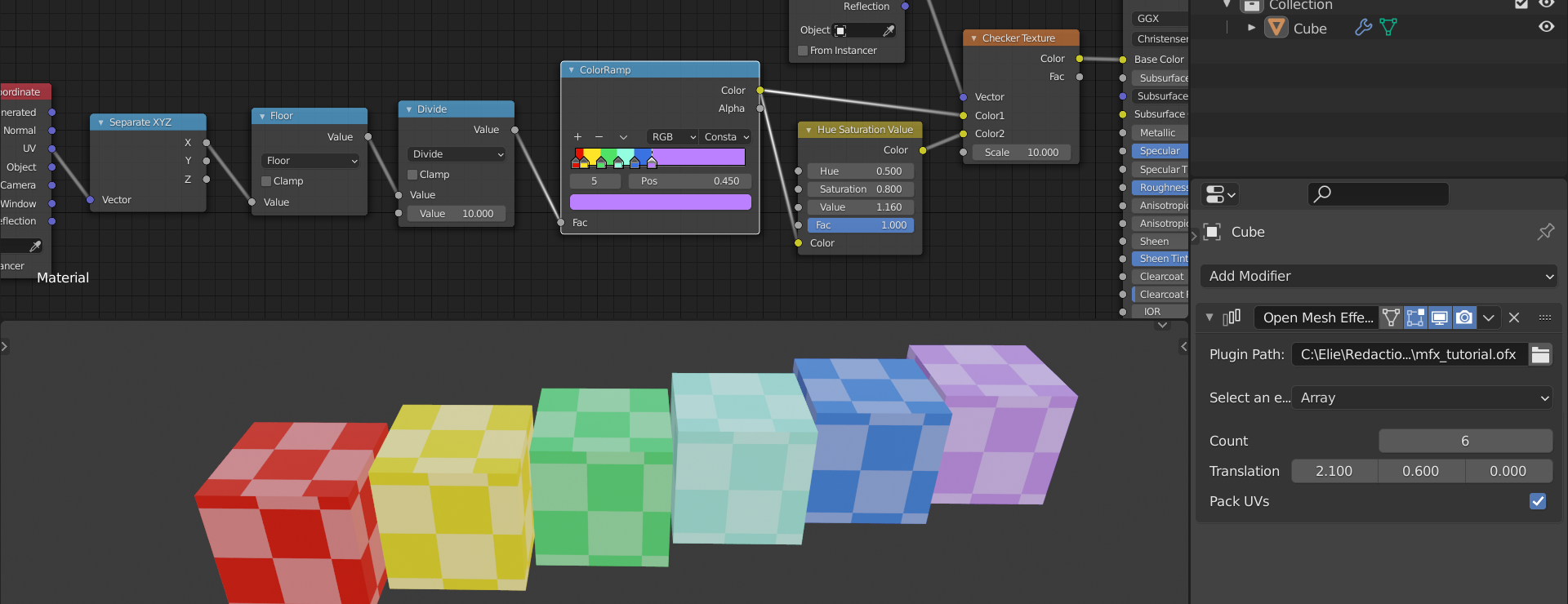

5. Manipulating UVs

We'll now add a feature to our Array effect: offsetting UVs of the instances so that they don't overlap. Let's first add an option to turn this on or off:

AddParam("offset_uv", false)

.Label("Offset UVs");

Added to in section 34

Used in section 23

Then we will also need to tell the host that we will manipulate UV-related attributes. The attributes we've been using so far were the three mandatory ones, so no request was needed, but any other attribute must be requested explicitly, here with RequestCornerAttribute().

AddInput(kOfxMeshMainInput)

.RequestCornerAttribute(

"uv0",

2, MfxAttributeType::Float,

MfxAttributeSemantic::TextureCoordinate,

false /* not mandatory */

);

AddInput(kOfxMeshMainOutput);

Redefined in section 35

Used in section 23

The semantic MfxAttributeSemantic::TextureCoordinate may allow the host to display a context-sensitive attribute picker to the user. This request means that the attribute should ideally be a float2 representing texture coordinates (ideally rather than necessarily, since we did not ask for it to be mandatory), and that we'll refer to it as "uv0" during the cooking step.

We may now retrieve this attribute at cook time. Since this attribute was not declared mandatory, we must check whether it is available with HasCornerAttribute().

bool offset_uv = GetParam<bool>("offset_uv").GetValue();

Added to in sections 36 and 37

Used in section 26

MfxAttributeProps input_uv;

if (offset_uv && input_mesh.HasCornerAttribute("uv0")) {

input_mesh.GetCornerAttribute("uv0").FetchProperties(input_uv);

if (input_uv.componentCount < 2 || input_uv.type != MfxAttributeType::Float) {

offset_uv = false; // incompatible type, so we deactivate this feature

}

} else {

offset_uv = false; // no uv available, so here again we deactivate this feature

}

Added to in sections 36 and 37

Used in section 26

We must also add this UV attribute to the output. This must be done before memory allocation (so that there is memory allocated for this attribute).

if (offset_uv) {

output_mesh.AddCornerAttribute(

"uv0",

2, MfxAttributeType::Float,

MfxAttributeSemantic::TextureCoordinate

);

}

Used in section 28

Here again, a semantic hint is added to mean that this attribute should be interpreted by the host as a texture coordinate.

NB While input attributes are requested in the Describe method, output attributes are created at cook time. This allows the effect to dynamically choose whether to add attributes or not.

Once allocated, we can now manipulate UV data:

if (offset_uv) {

MfxAttributeProps output_uv;

output_mesh.GetCornerAttribute("uv0").FetchProperties(output_uv);

for (int k = 0 ; k < count ; ++k) {

{Offset UVs of k-th instance, 40}

}

}

Added to in sections 30 and 39

Used in section 26

We simply shift the UVs of each instance along the U axis, by one unit per copy:

for (int i = 0 ; i < input_props.cornerCount ; ++i) {

int j = i + k * input_props.cornerCount;

float *in_p = reinterpret_cast<float*>(input_uv.data + i * input_uv.stride);

float *out_p = reinterpret_cast<float*>(output_uv.data + j * output_uv.stride);

out_p[0] = in_p[0] + k;

out_p[1] = in_p[1];

}

Used in section 39

6. Conclusion

You are thus able to write OpenMfx plugins for any compatible host using C++. If you encounter any issue during the process feel free to report it on GitHub: github.com/eliemichel/OpenMfx/issues.